LS-DYNA: a combined implicit/explicit solver. One scalable code for solving highly nonlinear transient problems, enabling the solution of coupled multi-physics and multi-stage problems. It reduces customer costs by enabling massively parallel processing, with discounted per-core or per-job pricing.

Category: LS-DYNA material failure criterion definition. Some material types include a failure criterion in their definition, but others do not. In that case a separate failure criterion must be defined and combined with the material parameters to specify the material's behavior. This is done with the *MAT_ADD_EROSION keyword.

LS-DYNA is the first commercial code to support isogeometric analysis (IGA), first through the implementation of generalized elements and then through keywords supporting non-uniform rational B-splines (NURBS). Many of the standard FEA capabilities, such as contact, spot-weld models, anisotropic constitutive laws, or frequency domain analysis, are readily available in LS-DYNA with IGA.

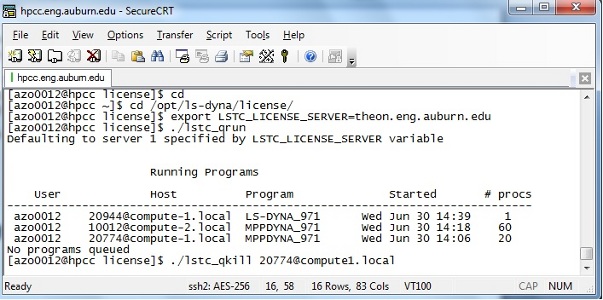

Both 32-bit and 64-bit executables in both serial and parallel versions are provided. The MPI parallel versions use OpenMPI as their MPI parallel library, the HPC Center's default version of MPI. The serial executable can also be run in OpenMP (not to be confused with OpenMPI) node-local SMP-parallel mode. The names of the executable files in the '/share/apps/lsdyna/default/bin' directory are:

Those with the string 'mpp' in the name are the MPI distributed parallel versions of the code. The integer (32 or 64) designates the precision of the build. In the examples below, a different executable is chosen depending on the type of script being submitted (serial or parallel, 32- or 64-bit). The scaling properties of LS-DYNA in parallel mode are limited, and users should not carelessly submit parallel jobs requesting large numbers of cores without understanding how their job will scale. A large 128 core job that runs only 5% faster than a 64 core job is a waste of resources. Please examine the scaling properties of your particular job before scaling up.
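One way to examine scaling is to time the same job at two core counts and compare the measured speedup with the ideal one. The following is a minimal sketch of that arithmetic, not part of LS-DYNA or the HPC Center tooling. The function names and the timings (1000 s on 64 cores, 950 s on 128 cores) are hypothetical, and "5% faster" is read here as 5% less elapsed time.

```python
def speedup(t_base: float, t_new: float) -> float:
    """How many times faster the new run is than the baseline run."""
    return t_base / t_new


def relative_efficiency(cores_base: int, t_base: float,
                        cores_new: int, t_new: float) -> float:
    """Fraction of the ideal speedup achieved when moving to more cores."""
    ideal = cores_new / cores_base  # perfect scaling would give this speedup
    return speedup(t_base, t_new) / ideal


if __name__ == "__main__":
    # Hypothetical timings (not measured): the 128-core run takes 5% less time.
    t64, t128 = 1000.0, 950.0
    print(f"speedup:    {speedup(t64, t128):.2f}x")
    print(f"efficiency: {relative_efficiency(64, t64, 128, t128):.0%}")
```

With these assumed timings the doubled core count yields a speedup of about 1.05x, which is roughly 53% of ideal scaling. In other words, nearly half of the additional cores contribute nothing.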

As is the case with most long running applications run at the CUNY HPC Center, whether parallel or serial, LS-DYNA jobs are run using a PBS batch job submission script. Here we provide some example scripts for both serial and parallel execution.

Note that before using this script you will need to set up the environment for LS-DYNA. On ANDY, 'modules' is used to manage environments. Setting up LS-DYNA is done with

First, an example serial execution script (called, say, 'airbag.job') run at 64 bits using the LS-DYNA 'airbag' example ('airbag.deploy.k') from the examples directory above as the input.

Details on the SLURM options at the head of this script file are discussed below, but in summary: '-q production_qdr' selects the routing queue into which the job will be placed; '-N ls-dyna_serial' sets this job's name; 'nodes=1 ntasks=1 mem=2880' requests 1 SLURM resource chunk that includes 1 cpu and 2880 MBytes of memory; and SLURM is allowed to place the resources needed for the job anywhere on the ANDY system.

The LS-DYNA command line sets the input file to be used and the amount of in-core memory that is available to the job. Note that this executable does NOT include the string 'mpp', which means that it is not the MPI executable. Users can copy the 'airbag.deploy.k' file from the examples directory and cut-and-paste this script to run this job. It takes a relatively short time to run. The SLURM command for submitting the job would be:

Here is a SLURM script that runs a 16 processor (core) MPI job. This script is set to run the TopCrunch '3cars' benchmark, which is relatively long-running, using MPI on 16 processors. There are a few important differences in this script.


Consider the differences in this script relative to the serial SLURM script above. First, the '-l select' line requests not 1 SLURM resource chunk but 16, each with 1 cpu (core) and 2880 MBytes of memory. This provides the necessary resources to run our 16 processor MPI-parallel job. Next, the LS-DYNA command line is different. The LS-DYNA MPI-parallel executable is used (ls-dyna_mpp64.exe), and it is run with the help of the OpenMPI job submission command 'mpirun', which sets the number of processors and the location of those processors on the system. The actual LS-DYNA keywords also add the string 'ncpu=16' to instruct LS-DYNA that this is to be a parallel run.
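The shape of the parallel command line described above can be sketched as follows. This is an illustration under stated assumptions, not the Center's actual script: the helper `mpp_command` and the input deck name `input.k` are hypothetical, `-np` is Open MPI's flag for the process count, and `i=` is LS-DYNA's input-file argument. Only the process count is set here; placement of the processes is left to the batch system.

```python
import shlex
from typing import List

BIN_DIR = "/share/apps/lsdyna/default/bin"  # directory named in the text
MPP_EXE = "ls-dyna_mpp64.exe"               # MPI executable named in the text


def mpp_command(input_deck: str, ncpu: int) -> List[str]:
    """Build the argv for an MPI-parallel LS-DYNA run (hypothetical helper)."""
    if ncpu < 1:
        raise ValueError("ncpu must be at least 1")
    return [
        "mpirun", "-np", str(ncpu),   # Open MPI launcher and process count
        f"{BIN_DIR}/{MPP_EXE}",       # 64-bit MPP executable
        f"i={input_deck}",            # LS-DYNA input deck (placeholder name)
        f"ncpu={ncpu}",               # tells LS-DYNA this is a parallel run
    ]


if __name__ == "__main__":
    # "input.k" is a placeholder, not the real '3cars' deck name.
    print(shlex.join(mpp_command("input.k", 16)))
```

Keeping the core count in a single variable ensures that the value passed to 'mpirun' and the 'ncpu=' string cannot drift apart; it should also match the number of resource chunks requested from the batch system.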

Running in parallel on 16 cores in 64-bit mode on ANDY, the '3cars' case takes about 9181 seconds of elapsed time to complete. If the user would like to run this job, they can grab the input files out of the directory '/share/apps/lsdyna/default/examples/3cars' on ANDY and use the above script.
